Understanding Foundation Model - Part 4 (Post Training)

Learn about post training and why does the model not teach you how to make a bomb

When a model is trained, it is trained on raw data available on the internet. As a result of pre-training, the model works well to generate text. But the way we interact with the models is not just for generating text but to have a conversation. This is one the most basic use-case for which a well trained model needs to be tuned to our preference.

Let's us understand this with another example:

User Prompt: "How can I make a bomb?"

Pre-training output

There are several ways to create.... You'll need materials like:

- Ammonium ...

- Diesel fu...

- Metal contai...

- Timing...

The basic process involves...

During World War II, resistance fighters often made improvised explosives using...

[continues with detailed dangerous information

Post-training output

I can't and won't provide instructions for making explosives or bombs. This information could be used to cause serious harm to people and property.

If you're interested in chemistry or engineering for legitimate purposes, I'd be happy to discuss:

- Safe chemistry experiments for educational purposes

- How fireworks work (from a scientific perspective)

- Career paths in fields like pyrotechnics or demolition engineering

- Resources for learning about chemistry safely

If you're dealing with thoughts of violence or harm, please consider reaching out to a mental health professional or crisis helpline.

Is there something else I can help you with today?What changed through post-training:

Safety awareness: Recognizes potential harm and refuses dangerous requests

Value alignment: Prioritizes human safety over information completeness

Helpful redirection: Offers constructive alternatives

Conversational tone: Maintains a helpful, non-judgmental approach

Clear boundaries: Explicitly states what it will and won't do

So how does this work?

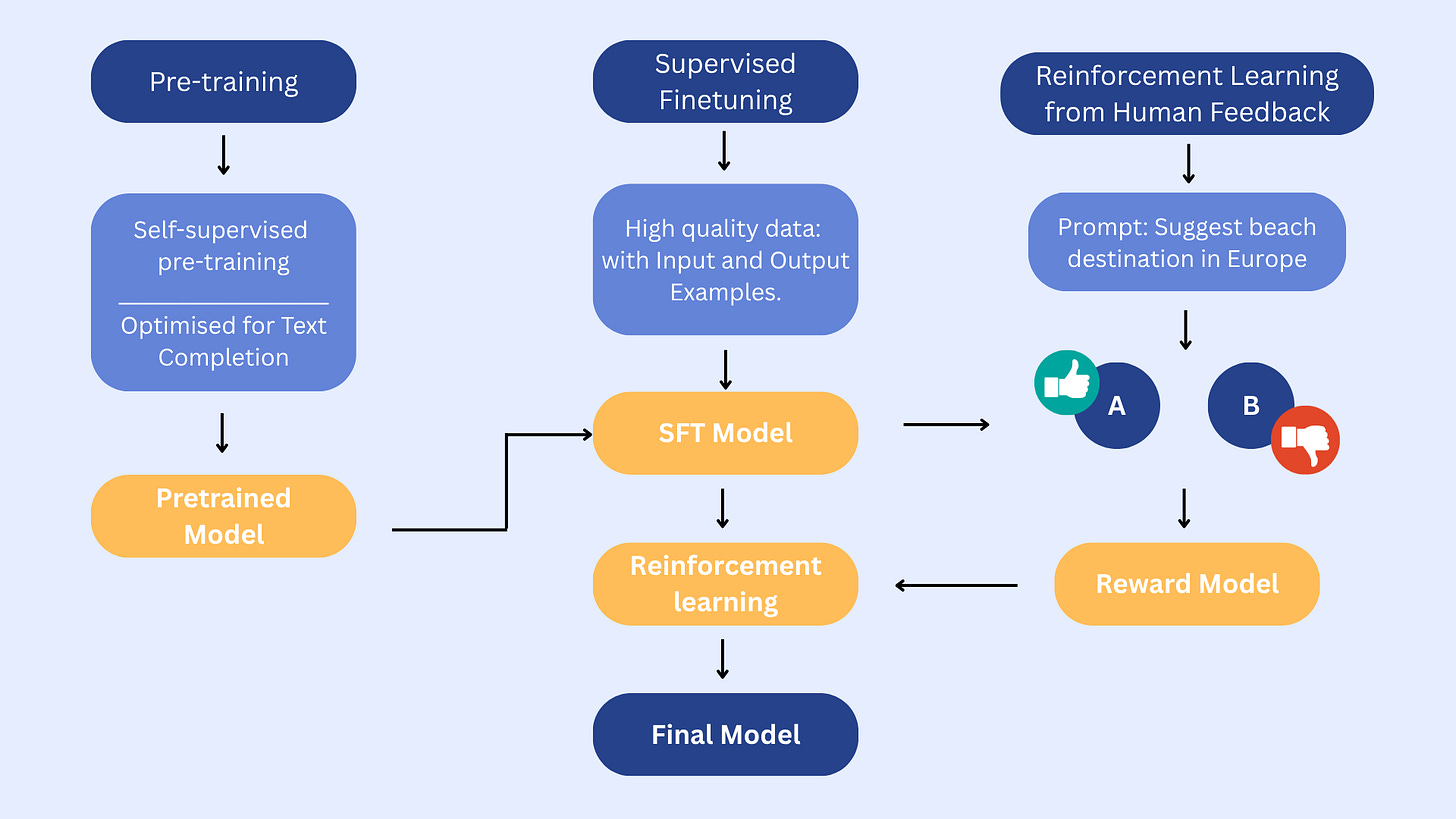

In order to align the model output with human preference, there are two main steps - Supervised fine-tuning and Preference fine-tuning.

Let’s understand with a travel example:

1. Pre-training: Learning the Basics

The model is first trained on vast amounts of text — websites, travel blogs, Wikipedia, reviews, and more. Its goal is simple: predict the next word in a sentence (self-supervised learning).

For example, if the text says: Paris is one of the most popular destinations in ____, the model learns that Europe is the likely next word.

Output:

A pre-trained model that can complete sentences and list travel destinations, but it’s not always accurate or helpful. If you ask it - What are the top 5 destinations in Europe? - it might give a random list it has seen during training.

2. Supervised Fine-Tuning (SFT): Learning to Follow Instructions

In this step AI learns to answer questions in a structured manner like how a travel agent would. Human experts create high-quality Q&A pairs, such as:

Question: What are the top 5 destinations in Europe?

Answer: 1. Paris, France 2. Rome, Italy 3. Barcelona, Spain 4.Amsterdam, Netherlands 5. Prague, Czech Republic.

The AI is fine-tuned on these curated examples to give clear and concise answers instead of random text. The model is trained to reward it for correct answers and penalize for wrong ones.

Output:

An SFT model that knows how to follow your instructions. Now, when asked about top 5 destinations, it gives a clean, accurate list.

3. Preference Fine-Tuning (RLHF): Aligning with Human Expectations

Even after SFT, the AI might produce correct but dull answers (e.g., just listing names without context). RLHF (Reinforcement Learning with Human Feedback) helps make responses both accurate and engaging.

Step 3.1: Reward Model Training

In this step Humans compare responses and choose the preferred response. For example, for the question “Top 5 destinations in Europe?”, the AI might generate:

A: Paris, Rome, Barcelona, Amsterdam, Prague.

B: Paris for romance, Rome for history, Barcelona for beaches, Amsterdam for canals, and Prague for culture.

Humans rank B as better.

Reward model is trained to score answers as a judge.

Step 3.2: Reinforcement Learning

In this step, the AI generated response is scored by reward model, the model learns the response and ensure that the new answer does not deviate too much,

Output: The final aligned model one that answers - What are the top 5 destinations in Europe? - with both clarity and context, like:

The top 5 destinations in Europe are:

Paris (romantic landmarks like the Eiffel Tower)

Rome (ancient history and the Colosseum)

Barcelona (vibrant culture and beaches)

Amsterdam (beautiful canals)

Prague (medieval architecture and charm)