Tokens: The "a", "b", "c" of LLMs

Understand the building blocks of large language models

When I first started working with large language models and exploring AI use cases, I kept running into one technical term: token. It was not an incidental detail; it was the one that hit the wallet. Every experiment we ran, from parsing travel documents to transcribing calls, had an associated cost. Naturally I wanted to understand how that cost was calculated, and that is how I came to look closely at tokens.

Everywhere I looked — in OpenAI pricing docs, in LLM performance benchmarks, even in random Twitter threads — someone was talking about tokens.

“100k token context window!”

“Costs 3 cents per 1,000 tokens.”

“Token limits affect your prompt size!”

It felt like there was this secret vocabulary no one had properly explained.

So let’s fix that. If you’re just starting with AI/ML or working around these models as a product manager, researcher, or engineer — this post is your go-to explanation of what tokens are, why they matter, and how they affect everything from model performance to your monthly bill.

What Is a Token?

A token is the basic unit of text that a language model reads and writes.

But here’s the interesting part: it’s not always a full word.

Let’s say you input:

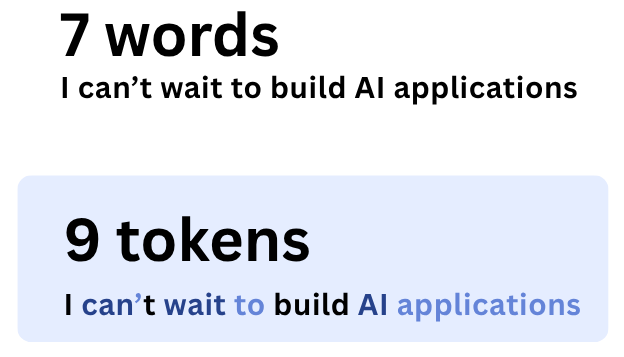

“I can’t wait to build AI applications.” That is 7 words, so you might expect 7 tokens. But in the eyes of GPT-4, it breaks into 9 tokens.

Depending on how the model is designed, even a single word like “unhappiness” might become two or three tokens: ["un", "happiness"] or ["un", "happi", "ness"].

Why break it like this? Because it helps models deal with everything from common words to totally new or made-up ones. Even if you invent a term like “WhatsApping”, the model can break it into known parts — “WhatsApp”, and “ing” — and still make sense of it.

This process is called tokenization — and it’s the first step every time you use an LLM.
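To make the idea concrete, here is a minimal sketch of subword splitting. It uses a greedy longest-match rule over a tiny, made-up vocabulary; the vocabulary and the `toy_tokenize` helper are assumptions for illustration. Real tokenizers, including GPT-4's, learn their pieces from data and will split text differently.

```python
# Hypothetical vocabulary of known pieces, chosen only for this illustration.
TOY_VOCAB = {"un", "happi", "ness", "whatsapp", "ing", "build"}


def toy_tokenize(word, vocab=TOY_VOCAB):
    """Split a word greedily into the longest known pieces, left to right."""
    word = word.lower()
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest candidate first, shrinking until a known piece matches.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                tokens.append(word[i:j])
                i = j
                break
        else:
            # No known piece starts here: fall back to a single character.
            tokens.append(word[i])
            i += 1
    return tokens


print(toy_tokenize("unhappiness"))  # ['un', 'happi', 'ness']
print(toy_tokenize("WhatsApping"))  # ['whatsapp', 'ing']
```

The single-character fallback is what lets even a completely unknown word be represented, just with more tokens.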

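It is natural to ask why models don't just use whole words or single letters. Whole words would need an enormous vocabulary and would still fail on new or misspelled words. Single characters would make every sequence much longer, and each one carries little meaning on its own. Subword tokens sit in between: common words stay whole, and rare words are built from smaller known parts.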

How Do Models Use Tokens?

Let’s take an example.

When you type a sentence into ChatGPT, the model doesn’t see your sentence as one chunk of text. It sees a sequence of tokens.

Input sentence: “I can’t wait to build awesome AI applications.”

Tokens (simplified): [“I”, “can’t”, “wait”, “to”, “build”, “awesome”, “AI”, “applications”, “.”]

Now, the model uses these tokens to figure out what should come next. It doesn’t work at the sentence level — it works token by token.

Given just the context "I can", a language model uses the patterns it learned during training to predict the most likely next token: essentially, how people usually continue that sentence.

Examples of possible next tokens:

" do" → "I can do"

" help" → "I can help"

" see" → "I can see"

"not" → "I cannot" (depending on spacing)

" run" → "I can run"

"’t" → "I can’t"

Each of these next tokens has a probability score. The model picks the one with the highest likelihood, or samples from the top options if randomness is added (using a parameter like temperature). Because this prediction step repeats for every single token of the output, generating text takes a lot of computing.
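Here is a rough sketch of that choice between "pick the most likely" and "sample with temperature". The scores below are invented for illustration and do not come from any real model; the function names are also hypothetical.

```python
import math
import random

# Hypothetical raw scores (logits) for tokens that could follow "I can".
# These numbers are made up for illustration only.
logits = {" do": 2.0, " help": 1.5, " see": 1.0, "not": 0.5, " run": 0.2, "'t": 1.8}


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens them."""
    scaled = {tok: s / temperature for tok, s in scores.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def pick_greedy(scores):
    """Always choose the single most likely token."""
    probs = softmax(scores)
    return max(probs, key=probs.get)


def pick_sampled(scores, temperature=1.0, rng=random):
    """Draw one token at random, weighted by its probability."""
    probs = softmax(scores, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


print(repr(pick_greedy(logits)))        # the top-scoring token, ' do'
print(repr(pick_sampled(logits, 0.7)))  # varies from run to run
```

A real model repeats this step once per generated token, each time with fresh scores that depend on everything written so far.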

Splitting Text into Tokens Is Called Tokenization

Tokenization is just the act of breaking a sentence into tokens.

Every large language model — GPT, BERT, Claude, Gemini — uses some form of tokenization as the first step. Without it, they can’t understand or generate text.

Each model has its own rules about how it splits up text. For example, GPT-4 uses a method that typically gives you about 75 English words per 100 tokens. Some models pack common phrases into a single token, while others break rare or made-up words into multiple parts.

GPT-4 has been offered with a context limit of about 32,000 tokens (its 32k variant), which is the maximum amount of text the model can handle in one go. The limit covers both your input and the model’s output. So if you feed in a 20,000-token document, the model has room for at most about 12,000 tokens in its reply. That’s why longer prompts can cut responses short.
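The budget arithmetic is simple enough to sketch in a few lines. The 32,000 figure is the limit quoted in the text; the helper name `max_reply_tokens` is just an illustration, not part of any API.

```python
CONTEXT_LIMIT = 32_000  # the token limit quoted in the text


def max_reply_tokens(prompt_tokens, context_limit=CONTEXT_LIMIT):
    """Tokens left for the model's reply once the prompt has been counted."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    # Input and output share one window, so the reply gets whatever remains.
    return max(context_limit - prompt_tokens, 0)


print(max_reply_tokens(20_000))  # 12000
print(max_reply_tokens(40_000))  # 0
```

In practice, a prompt that exceeds the limit is usually rejected or truncated rather than answered with an empty reply, so treat the zero as a warning sign.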

One Word is not equal to one Token

Many of us assume that one word equals one token, but that is often not the case.

On average:

1 token ≈ ¾ of a word (for English text with GPT-4)

So, 100 tokens ≈ 75 words
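That rule of thumb turns into a quick back-of-the-envelope estimator. The sketch below assumes the ¾ ratio holds and borrows the "3 cents per 1,000 tokens" figure quoted earlier purely as an example price; actual prices and ratios vary by model and by language.

```python
import math

WORDS_PER_TOKEN = 0.75      # rule of thumb from the text (English, GPT-4)
PRICE_PER_1K_TOKENS = 0.03  # dollars; example price only, check current pricing


def estimate_tokens(word_count):
    """Rough token count for a given number of English words."""
    return math.ceil(word_count / WORDS_PER_TOKEN)


def estimate_cost(token_count, price_per_1k=PRICE_PER_1K_TOKENS):
    """Rough cost in dollars for a given number of tokens."""
    return token_count / 1000 * price_per_1k


tokens = estimate_tokens(750)
print(tokens)                           # 1000
print(round(estimate_cost(tokens), 4))  # 0.03
```

For anything you plan to bill against, count tokens with the model's actual tokenizer instead of estimating from words.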

This matters because when you are using the API, token usage drives speed and cost, and when training models, token count defines how much data the model sees. Every model also has a fixed vocabulary of tokens it can understand. That vocabulary could be about 32,000 tokens (like Mistral's Mixtral 8x7B) or about 100,000 tokens (like the cl100k_base encoding used by GPT-4).

The model can use these tokens to build any sentence, much like how we use the alphabet to create words. A larger vocabulary lets the model be more expressive, but also more complex and costly to train.
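Under the hood, a vocabulary is essentially a lookup table from token strings to integer IDs, and the model only ever sees the IDs. Here is a minimal sketch with a made-up six-entry vocabulary; real vocabularies hold tens of thousands of entries, and the names here are hypothetical.

```python
# Hypothetical six-entry vocabulary, for illustration only.
ID_TO_TOKEN = ["<unk>", "I", " can", "'t", " wait", "."]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(ID_TO_TOKEN)}


def encode(tokens):
    """Map token strings to integer IDs; unknown tokens map to <unk> (ID 0)."""
    return [TOKEN_TO_ID.get(t, TOKEN_TO_ID["<unk>"]) for t in tokens]


def decode(ids):
    """Map integer IDs back to text by joining the token strings."""
    return "".join(ID_TO_TOKEN[i] for i in ids)


ids = encode(["I", " can", "'t", " wait", "."])
print(ids)          # [1, 2, 3, 4, 5]
print(decode(ids))  # I can't wait.
```

Note that the spaces live inside the tokens themselves, which is why joining them with no separator gives back the original sentence.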

Before a model can think, write, or answer — it has to tokenize. Understanding tokens will help you:

Use APIs better

Interpret model outputs

Optimize cost and performance

And eventually, train your own models

So next time you type into ChatGPT, pause for a moment and think about what goal you want to achieve and how tokens will affect your overall product design and costs.